Every Google Analytics user needs to be able to tell a website's real traffic apart from artificial traffic. Computer programs called bots perform automated tasks over the Internet, and they can generate a large share of a website's traffic. In this article you will learn what you can do to exclude that generally unwanted traffic.

At the end of last year, published reports stated that only around 50% of web traffic comes from real human beings. That is a problem, because with so many bots around it becomes hard to find out how much of the traffic to any of your websites is real.

Internet bots are designed to carry out simple, repetitive tasks automatically, tasks that would be tedious or impossible for people to do. The largest use of bots is web spidering. A spider, also known as a web crawler, is a script that fetches, analyzes and files information from web servers much faster than any human could. It gets its name because it crawls over the Web.

In the past, Google Analytics was largely protected from bots because its tracking code runs in JavaScript and most bots could not execute JavaScript. Nowadays there are “smart” bots that can execute JavaScript and therefore bypass that protection.

Besides these, there are malicious bots. Some are designed to carry out denial-of-service (DoS) attacks, while others post unrelated spam messages on your sites with links to malicious content. These are further good reasons to keep bots off your web pages.

Here are five methods to exclude bot-generated traffic from Google Analytics:

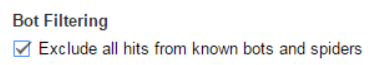

Method #1 – Enable Bot Filtering from the Admin Panel

Google Analytics has a checkbox that removes hits from known bots and spiders. It is located in the “Admin” panel, under the “View” settings, and is labelled “Bot Filtering”. It is advisable to enable it in a test view first, before turning it on in your main reporting view. That way you can see what difference it makes to the data you collect.

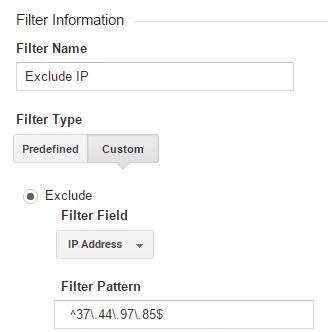

Method #2 – Filter Specific IP Addresses

IP addresses are not displayed in Google Analytics reports, and the tracking JavaScript cannot see them by default either. You can, however, collect them on your own site, for example from your web server's access logs. Once you have gathered the IP addresses you want to exclude from your traffic data, you can block them through the “View Filters” menu in Google Analytics.
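If you have more than a handful of addresses to block, one option is a custom exclude filter on the “IP Address” field that uses a regular expression. The TypeScript sketch below builds such patterns: it escapes the dots so each address matches literally, anchors the expression so that `10.0.0.1` does not also match `10.0.0.12`, and splits the list into several patterns when it grows too long. The function names and the 255-character default are assumptions for illustration; check the length limit your filter form actually enforces.

```typescript
// Escape regular-expression metacharacters so "203.0.113.5" matches only that literal address.
function escapeRegex(text: string): string {
  return text.replace(/[.*+?^${}()|[\]\\]/g, "\\$&");
}

// Wrap escaped addresses in one anchored alternation, e.g. ^(10\.0\.0\.1|10\.0\.0\.2)$
function wrap(parts: string[]): string {
  return `^(${parts.join("|")})$`;
}

// Build one or more exclusion patterns, none longer than maxLength characters.
// The 255 default is an assumed field limit; adjust it to what your filter form accepts.
function buildIpExclusionPatterns(ips: string[], maxLength = 255): string[] {
  // Trim whitespace, drop empty entries and remove duplicates while keeping order.
  const unique = ips
    .map((ip) => ip.trim())
    .filter((ip, i, all) => ip.length > 0 && all.indexOf(ip) === i);

  const patterns: string[] = [];
  let current: string[] = [];
  for (const ip of unique) {
    const escaped = escapeRegex(ip);
    if (wrap([escaped]).length > maxLength) {
      throw new Error(`Address ${ip} alone exceeds the ${maxLength}-character limit`);
    }
    // Start a new pattern when adding this address would make the current one too long.
    if (current.length > 0 && wrap([...current, escaped]).length > maxLength) {
      patterns.push(wrap(current));
      current = [];
    }
    current.push(escaped);
  }
  if (current.length > 0) patterns.push(wrap(current));
  return patterns;
}
```

Each string returned by `buildIpExclusionPatterns` would go into the filter pattern of its own exclude filter.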

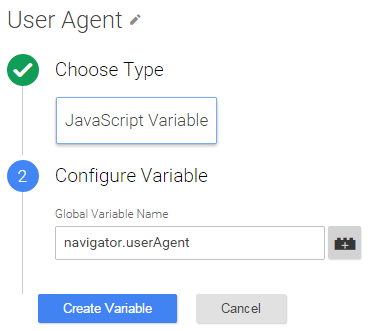

Method #3 – Add a JavaScript Variable for User Agents

Even if you block specific IPs, some bots use multiple IP addresses and switch between them. With Google Tag Manager, you can pass every visitor's user agent string into Google Analytics as a custom dimension. You can then exclude bot sessions based on that value.

Create a custom dimension in the “Admin” panel of Google Analytics. Name it “User Agent” and set its scope to “Session”. Leave its “Index” as assigned, but note the number; you will need it in a later step.

In Google Tag Manager, create a new variable of the type “JavaScript Variable”, name it “User Agent”, and set its global variable name to `navigator.userAgent`.

In your Google Analytics pageview tag, add a custom dimension entry that uses the “Index” of the dimension you created in Google Analytics, and enter the {{User Agent}} variable as its “Dimension Value”.
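For a site that loads analytics.js directly instead of going through Google Tag Manager, the same result can be sketched by hand: set the field `dimension` plus the index on the tracker before sending the pageview. The `ga("set", ...)` and `ga("send", "pageview")` commands are standard analytics.js commands; the function names are hypothetical, and `ga` is passed in as a parameter so the sketch can be tried outside a browser.

```typescript
// Minimal type for the analytics.js command queue function `ga`.
type GaFunction = (command: string, ...args: unknown[]) => void;

// analytics.js addresses custom dimensions by the field name "dimension" + index.
function dimensionField(index: number): string {
  if (index < 1 || Math.floor(index) !== index) {
    throw new Error(`Custom dimension index must be a positive integer, got ${index}`);
  }
  return `dimension${index}`;
}

// Store the user agent on the tracker, then send the pageview so the hit carries it.
function sendPageviewWithUserAgent(ga: GaFunction, index: number, userAgent: string): void {
  ga("set", dimensionField(index), userAgent);
  ga("send", "pageview");
}

// In a browser page with analytics.js loaded, a call could look like:
// sendPageviewWithUserAgent(window.ga, 1, navigator.userAgent);
```

This would replace the usual `ga("send", "pageview")` line of the tracking snippet rather than sending a second pageview.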

Finally, in “Admin > View > Filters”, you can exclude user agents that are known to be bots or that behave suspiciously, for example visitors who bounce on every visit or who visit hundreds of times per day.
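Deciding which user agents to exclude is partly a judgment call. The TypeScript sketch below shows one way to combine the two kinds of evidence mentioned above: a user agent that names itself as a bot, and behavior such as hundreds of sessions per day or bouncing on nearly every session. The token list and every threshold are hypothetical starting points, not values taken from Google Analytics, and the statistics are assumed to be per client (for example one row per client ID and day), since many real visitors share an identical browser user agent string.

```typescript
// Hypothetical token list: substrings that many self-declared crawlers and
// scripted HTTP clients include in their user agent. Extend it from your own reports.
const BOT_TOKENS = ["bot", "crawler", "spider", "slurp", "headlesschrome", "curl", "wget", "python-requests"];

// Build one case-insensitive regular expression that matches any of the tokens.
function botPattern(tokens: string[]): RegExp {
  const escaped = tokens.map((t) => t.replace(/[.*+?^${}()|[\]\\]/g, "\\$&"));
  return new RegExp(escaped.join("|"), "i");
}

// Activity of one client (for example one client ID) on one day.
interface ClientStats {
  userAgent: string;
  sessionsPerDay: number;
  bounceRate: number; // fraction of sessions that bounced, between 0 and 1
}

// Hypothetical thresholds; tune them against your own data.
interface Thresholds {
  maxSessionsPerDay: number;    // "hundreds of visits per day"
  maxBounceRate: number;        // bouncing on (nearly) every session...
  minSessionsForBounce: number; // ...once there are enough sessions to judge
}

const DEFAULTS: Thresholds = { maxSessionsPerDay: 200, maxBounceRate: 0.99, minSessionsForBounce: 10 };

function isSuspicious(c: ClientStats, pattern: RegExp, t: Thresholds = DEFAULTS): boolean {
  // An empty user agent, or one containing a bot token, is flagged outright.
  if (c.userAgent.trim() === "" || pattern.test(c.userAgent)) return true;
  // Otherwise flag unusually high volume or repeated bouncing.
  if (c.sessionsPerDay >= t.maxSessionsPerDay) return true;
  return c.sessionsPerDay >= t.minSessionsForBounce && c.bounceRate >= t.maxBounceRate;
}
```

User agents flagged only by behavior are worth reviewing by hand before you add them to an exclude filter, because excluding a common browser string would also remove real visitors.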

Method #4 – Add a CAPTCHA Requirement

To make things harder for bots that still get through despite the previous measures, use some form of CAPTCHA. Google's own reCAPTCHA service is a good choice, in particular its newer variant known as “noCAPTCHA”, which looks for typical human behavior such as mouse movement, so that most people do not need to solve a challenge at all.
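A CAPTCHA only protects you if the token it produces is checked on your server. The sketch below assumes the reCAPTCHA v2 (“noCAPTCHA”) flow, in which the widget adds a `g-recaptcha-response` token to the submitted form and your server POSTs it, together with your secret key, to Google's `siteverify` endpoint. The endpoint URL and the `secret`, `response` and `remoteip` parameters are the documented ones; the function names, the optional hostname check and the use of the global `fetch` (Node.js 18 or later) are assumptions of this sketch.

```typescript
// Documented reCAPTCHA verification endpoint.
const VERIFY_URL = "https://www.google.com/recaptcha/api/siteverify";

// Fields of the siteverify JSON reply that this sketch uses.
interface SiteVerifyResponse {
  success: boolean;
  hostname?: string;
  "error-codes"?: string[];
}

// Form-encode the request body: secret key, the user's token, optional client IP.
function buildVerifyBody(secret: string, token: string, remoteIp?: string): string {
  const params = new URLSearchParams({ secret, response: token });
  if (remoteIp) params.set("remoteip", remoteIp);
  return params.toString();
}

// Accept only a successful check, optionally issued for the expected hostname.
function acceptVerification(result: SiteVerifyResponse, expectedHost?: string): boolean {
  if (result.success !== true) return false;
  return expectedHost === undefined || result.hostname === expectedHost;
}

// POST the token to Google and decide whether the form submission came from a human.
async function verifyRecaptcha(
  secret: string,
  token: string,
  expectedHost?: string,
  remoteIp?: string,
): Promise<boolean> {
  const res = await fetch(VERIFY_URL, {
    method: "POST",
    headers: { "Content-Type": "application/x-www-form-urlencoded" },
    body: buildVerifyBody(secret, token, remoteIp),
  });
  if (!res.ok) return false;
  const data = (await res.json()) as SiteVerifyResponse;
  return acceptVerification(data, expectedHost);
}
```

A non-2xx reply is treated as a failed check; a network error makes the promise reject, which your form handler should also treat as a failure.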

Method #5 – Require Users to Validate Their Emails

Require users to enter a valid email address, check their inbox, and click the confirmation link in the message you send them. Some very sophisticated bots can even do that, so as a final step, add a reCAPTCHA here too, for example one that uses image recognition.
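How confirmation links are built is left open here. One common approach, sketched below in TypeScript for Node.js, signs the email address and an expiry time with an HMAC, so that a link cannot be forged or used after it expires and no token table is needed. The `https://example.com/confirm` URL, the parameter names and the 24-hour lifetime are placeholders; `createHmac` and `timingSafeEqual` are Node's built-in `crypto` functions.

```typescript
import { createHmac, timingSafeEqual } from "node:crypto";

const DAY_MS = 24 * 60 * 60 * 1000;

// HMAC over "email|expiry": changing either value invalidates the signature.
function sign(email: string, expiresAt: number, secret: string): string {
  return createHmac("sha256", secret).update(`${email}|${expiresAt}`).digest("hex");
}

// Build the link to put in the confirmation email (example.com is a placeholder).
function confirmationLink(email: string, secret: string, now: number, ttlMs = DAY_MS): string {
  const expiresAt = now + ttlMs;
  const params = new URLSearchParams({
    email,
    expires: String(expiresAt),
    sig: sign(email, expiresAt, secret),
  });
  return `https://example.com/confirm?${params.toString()}`;
}

// Check the query string of a clicked link: all fields present, not expired, signature valid.
function verifyConfirmation(query: URLSearchParams, secret: string, now: number): boolean {
  const email = query.get("email");
  const sig = query.get("sig");
  const expires = Number(query.get("expires"));
  if (!email || !sig || !isFinite(expires) || now > expires) return false;
  const expected = Buffer.from(sign(email, expires, secret), "hex");
  const given = Buffer.from(sig, "hex");
  // timingSafeEqual throws on a length mismatch, so compare lengths first.
  return given.length === expected.length && timingSafeEqual(given, expected);
}
```

The secret key must stay on the server; anyone who knows it can mint valid links.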

These are some of the most effective ways to exclude hits from Internet bots and spiders in Google Analytics for your site(s). After implementing the methods described above, you should see a noticeable change in your reports. The CAPTCHA and email-validation steps also help protect your site against malicious bots. To sum up, all of these methods are viable ways to fight bots, and every Google Analytics user should consider them.