DeepSeek, a fast-growing Chinese artificial intelligence (AI) startup that has recently gained widespread attention, inadvertently left one of its databases exposed online. This security lapse could have given cybercriminals access to highly sensitive information.

According to security researcher Gal Nagli from Wiz, the misconfigured ClickHouse database granted full administrative control, making it possible for unauthorized users to access internal data without restrictions.

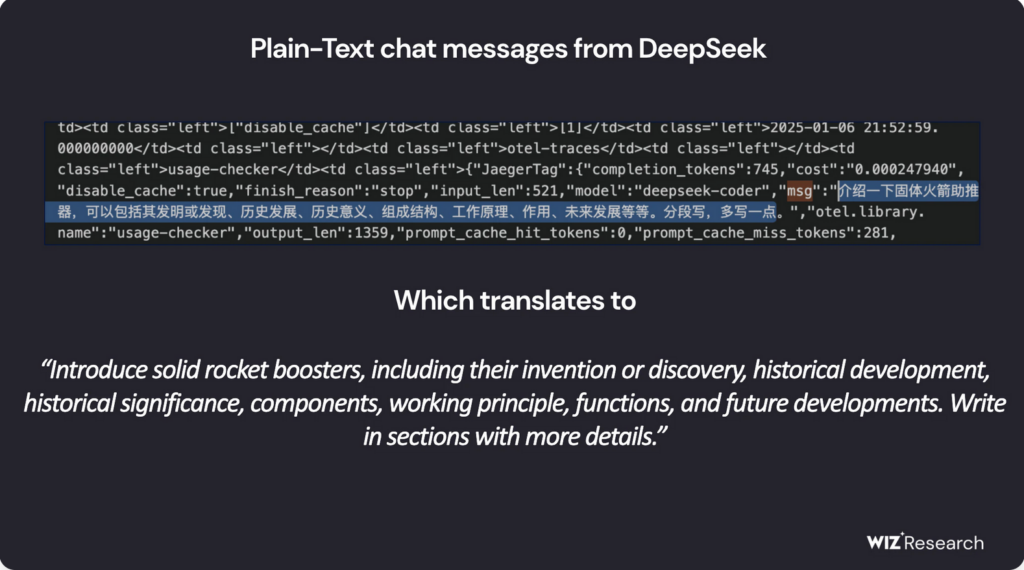

The exposed database reportedly contained over a million lines of log streams, including chat histories, backend details, API secrets, secret keys, and other critical operational metadata. Following multiple contact attempts from Wiz, DeepSeek has since closed the exposure.

It is worth mentioning that at the time this article was published, we were unable to register for DeepSeek AI’s service and were shown a notice instead.

DeepSeek Allowing Unrestricted Access to Sensitive Data

The exposed database, hosted on oauth2callback.deepseek[.]com:9000 and dev.deepseek[.]com:9000, allowed unrestricted access to a broad range of confidential data. Wiz researchers warned that the exposure could have led to complete database control, privilege escalation, and data exploitation, all without any authentication.

Furthermore, attackers could have used ClickHouse’s HTTP interface to execute SQL queries directly from a web browser. At this point, it remains uncertain whether any malicious actors managed to infiltrate or extract the exposed data before the issue was resolved.
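For teams that run ClickHouse themselves, the point about the HTTP interface can be turned into a quick self-audit. The sketch below is not taken from the Wiz report; it only assumes ClickHouse's documented behaviour of accepting SQL in a `query` URL parameter (default HTTP port 8123). The function names and the hostname `clickhouse.internal.example` are hypothetical, and the check should only be pointed at infrastructure you own.

```python
from urllib.error import URLError
from urllib.parse import quote, urlencode
from urllib.request import urlopen


def build_query_url(host: str, sql: str, port: int = 8123) -> str:
    """Build a ClickHouse HTTP-interface URL carrying `sql` in the query string."""
    # quote_via=quote encodes spaces as %20, matching the ClickHouse docs' examples.
    return f"http://{host}:{port}/?{urlencode({'query': sql}, quote_via=quote)}"


def answers_without_credentials(host: str, port: int = 8123,
                                timeout: float = 3.0, opener=urlopen) -> bool:
    """Return True if the server runs `SELECT 1` for a request with no credentials.

    `opener` is injectable so the check can be exercised without network access.
    """
    url = build_query_url(host, "SELECT 1", port)
    try:
        with opener(url, timeout=timeout) as resp:
            return resp.status == 200 and resp.read().strip() == b"1"
    except (URLError, OSError):
        # Connection refused, timeout, or an HTTP error such as 401/403.
        return False


if __name__ == "__main__":
    # Hypothetical host name; replace with a server you are responsible for.
    host = "clickhouse.internal.example"
    if answers_without_credentials(host):
        print(f"{host}: answers SQL without authentication, lock it down")
    else:
        print(f"{host}: no unauthenticated answer")
```

A `True` result means anyone who can reach the port can run queries, which is the condition described above. A `False` result is not proof of safety, since other ports or interfaces may still be exposed.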

“The rapid adoption of AI services without adequate security measures presents serious risks,” Nagli stated in a comment to The Hacker News. “While discussions around AI security often focus on long-term threats, immediate dangers frequently stem from fundamental security oversights—such as accidental database exposure.”

He further emphasized that protecting user data must remain a top priority, urging security teams to work closely with AI developers to prevent similar incidents in the future.

DeepSeek Under Regulatory Scrutiny

DeepSeek has recently gained recognition for its cutting-edge open-source AI models, positioning itself as a formidable competitor to industry leaders like OpenAI. Its R1 reasoning model has been dubbed “AI’s Sputnik moment” for its potential to disrupt the field.

The company’s AI chatbot has surged in popularity, topping app store rankings on both Android and iOS across multiple countries. However, its rapid expansion has also made it a target for large-scale cyberattacks, prompting DeepSeek to temporarily suspend user registrations to mitigate security threats.

Beyond technical vulnerabilities, the company has also drawn regulatory scrutiny. Privacy concerns surrounding DeepSeek’s data practices, coupled with its Chinese origins, have raised national security alarms in the United States.

Legal Challenges

In a significant development, Italy’s data protection regulator recently requested details on DeepSeek’s data collection methods and training sources. Shortly thereafter, the company’s apps became unavailable in Italy, though it remains unclear whether this move was a direct response to regulatory inquiries.

Meanwhile, DeepSeek is also facing allegations that it may have improperly leveraged OpenAI’s application programming interface (API) to develop its own models. Reports from Bloomberg, The Financial Times, and The Wall Street Journal indicate that both OpenAI and Microsoft are investigating whether DeepSeek engaged in an unauthorized practice known as AI distillation—a technique that involves training models on outputs generated by another AI system.

More about AI Distillation

AI distillation, also known as knowledge distillation, is a technique in machine learning where a smaller, more efficient AI model is trained using the outputs of a larger, more complex model. This method allows developers to transfer knowledge from a powerful AI (the teacher model) to a lightweight AI (the student model) while preserving much of the teacher’s capability.
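To make the teacher-student idea concrete, here is a minimal sketch of the classic distillation objective, in which the student is penalised for diverging from the teacher's temperature-softened output distribution. It illustrates the general technique only and says nothing about how any particular company trains its models. Distillation through a text-only API would instead fine-tune on generated text, since raw scores are not usually exposed. The function names and example numbers are illustrative assumptions.

```python
import math


def softmax(logits, temperature=1.0):
    """Convert raw scores to probabilities; a higher temperature flattens them."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)  # soft targets from the teacher
    q = softmax(student_logits, temperature)  # student's current prediction
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return temperature ** 2 * kl


if __name__ == "__main__":
    teacher = [4.0, 1.0, 0.5]   # made-up scores over three classes
    student = [2.0, 1.5, 1.0]
    print("loss before training:", distillation_loss(teacher, student))
    print("loss when outputs match:", distillation_loss(teacher, teacher))
```

The loss is zero when the student reproduces the teacher's distribution exactly and grows as the two diverge, so minimising it pulls the student toward the teacher's behaviour.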

Originally designed to enhance efficiency and reduce computational costs, distillation has become a widely used practice in AI development. However, when done without proper authorization, such as by extracting knowledge from proprietary AI models, it raises serious ethical and legal concerns.

“We are aware that groups in [China] are actively working to replicate advanced U.S. AI models through techniques such as distillation,” an OpenAI representative told The Guardian.

With DeepSeek’s rapid growth in the AI sector, concerns surrounding its security, regulatory compliance, and ethical data practices have intensified. The company’s approach to these issues will be a key factor in determining its long-term success in the global AI landscape.